Get started with QpiAI Pro today and unlock the full potential of AI for your organization.

LLM Deployment

Deploy foundation and fine-tuned LLMs with QpiAI Pro, optimized for low-latency, scalable performance across cloud, hybrid, and edge infrastructure, so teams can move from fine-tuning to live inference quickly and reliably.

QpiAI Pro lets enterprises create or upload datasets, launch experiments, monitor training in real time, and export deployment-ready models from a unified no-code platform. With built-in AutoML optimizing every tuning workflow, teams get faster iteration cycles, higher model performance, and zero infrastructure overhead, delivering production-grade AI at scale.

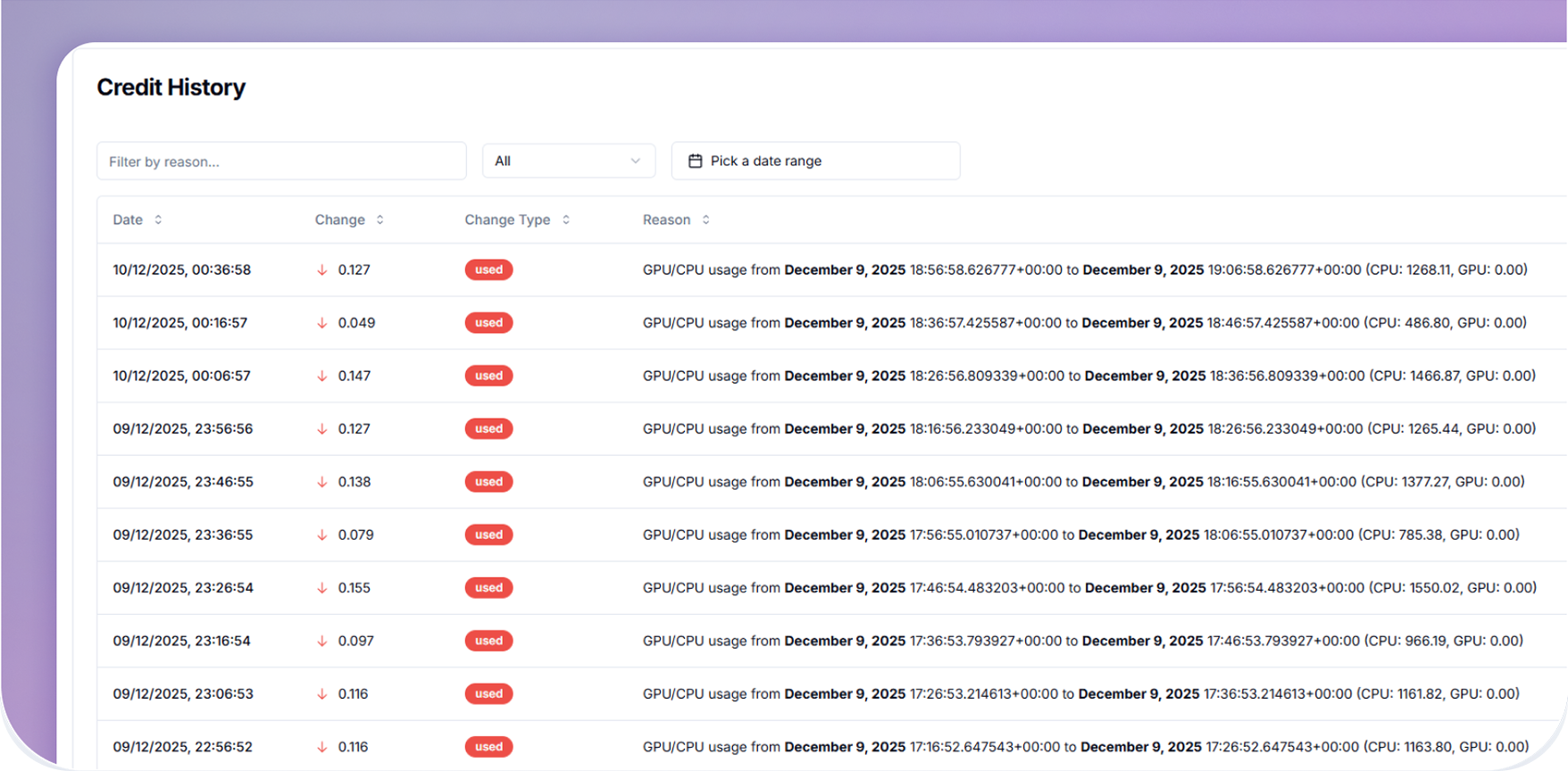

Leverage usage-based pricing and automated scaling to control inference spend and maximize ROI.

Dynamically adjust compute resources based on traffic, ensuring low latency and cost efficiency.

Instantly publish LLMs to production with no DevOps scripts or manual configurations.

Enforce encryption at rest and in transit, plus role-based access controls for compliance.
