LLM RL Tuning

💡Optimizing Model Behavior with Reinforcement Learning (RL)

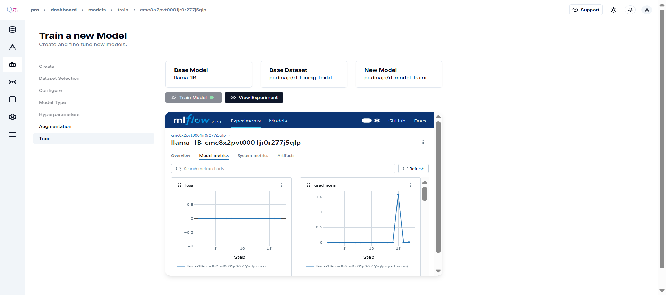

RL tuning lets your LLM learn from reward signals, specifically verifiable correctness rewards computed from its final answers. QpiAI Pro uses Group Relative Policy Optimization (GRPO), an approach inspired by DeepSeek's models, to fine-tune LLMs for better performance on math and multiple-choice question (MCQ) tasks, with results measured by accuracy-based evaluation metrics.
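The "group relative" part of GRPO works roughly as follows. For each prompt, several completions are sampled and each is scored with the verifiable reward. Every reward is then normalized against the group's mean and standard deviation, so answers that beat their siblings get a positive advantage. The minimal Python sketch below illustrates only that normalization step. It assumes binary 0/1 correctness rewards and uses the population standard deviation. The function name `group_relative_advantages` is hypothetical and is not a QpiAI Pro API. Full GRPO implementations also add a clipped policy-gradient loss and a KL penalty, and they may differ in these details.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Hypothetical helper (not a QpiAI Pro API): normalize each completion's
    reward against the other completions sampled for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use sample std
    # eps avoids division by zero when every reward in the group is identical
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one math prompt; 1.0 = correct final answer (assumed).
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))
```

With rewards `[1.0, 0.0, 0.0, 1.0]`, the two correct answers receive equal positive advantages and the two incorrect ones receive equal negative advantages. If every answer in a group gets the same reward, all advantages are zero and that prompt contributes no reward signal.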

Similar Flow to Preference Tuning: The process remains the same as LLM Preference Tuning, with a few key differences:

- Select “Train Model+” and create a repository.

- Set Task Type to RL Tuning (instead of Preference or Instruction Tuning).

- Choose a pre-uploaded RL Tuning dataset that meets these requirements:

  - Formatted with two fields: input, output.

  - Consists of math or MCQ questions.

- Select a model for RL Tuning:

  - Llama-1B

  - Llama-3B

  - Qwen-1.5B

  - Qwen-3B-Coder

- Configure settings and initiate training.
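The sketch below shows what `input`, `output` records and a final-answer correctness reward might look like. The JSONL layout, the "Answer:" marker convention, and every helper name here (`load_records`, `extract_final_answer`, `correctness_reward`) are assumptions for illustration. They are not QpiAI Pro's documented file format or its actual reward function.

```python
import json
import re
from typing import Optional

# Hypothetical sample data: JSONL records using the two required fields.
SAMPLE_JSONL = """\
{"input": "What is 12 * 7?", "output": "84"}
{"input": "Which planet is largest? A) Mars B) Jupiter C) Venus D) Earth", "output": "B"}
"""


def load_records(text: str) -> list[dict]:
    """Parse JSONL and check that each record has exactly input and output."""
    records = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        rec = json.loads(line)
        if set(rec) != {"input", "output"}:
            raise ValueError(f"expected keys input, output; got {sorted(rec)}")
        records.append(rec)
    return records


def extract_final_answer(completion: str) -> Optional[str]:
    """Assumed convention: the model ends with 'Answer: <final answer>'.
    Uses the last marker, so a self-corrected answer wins."""
    matches = re.findall(r"Answer:\s*(.+)", completion)
    return matches[-1].strip() if matches else None


def correctness_reward(completion: str, reference: str) -> float:
    """Binary verifiable reward: 1.0 if the final answer matches the reference."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # no parsable final answer earns no reward
    return 1.0 if answer.lower() == reference.strip().lower() else 0.0


records = load_records(SAMPLE_JSONL)
print(correctness_reward("12 * 7 = 84. Answer: 84", records[0]["output"]))  # → 1.0
print(correctness_reward("Mars is red. Answer: A", records[1]["output"]))  # → 0.0
```

Because only the final answer is compared, the reward stays verifiable and independent of how the model phrases its reasoning. Real math graders usually also normalize numbers and expressions before comparing.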

💡 Training Status Indicators:

- 🟡 Yellow – Training in progress

- 🟢 Green – Training completed successfully

- 🔴 Red – Training failed (retry or report the issue)

💡 Once training is complete, your model is no longer just a direct answer generator; it becomes a reasoning-capable model. Through reinforcement learning with GRPO and verifiable rewards, the model learns to structure its own chain of thought, verify its work mid-reasoning, and correct itself, so it reasons toward an answer instead of simply outputting one.